
The convergence of game theory and large language models (LLMs) represents one of the most intriguing intersections in modern artificial intelligence. While game theory has long been the mathematical backbone for understanding strategic interactions between rational agents, its application to LLMs opens up fascinating questions about AI behavior, training dynamics, and the future of human-AI collaboration.

The Foundation: What is Game Theory?

Game theory is the mathematical study of strategic decision-making among rational agents. Formalized by John von Neumann and Oskar Morgenstern in their 1944 book Theory of Games and Economic Behavior, it provides a framework for analyzing situations where the outcome for each participant depends not only on their own decisions but also on the decisions of others.

Core Concepts in Game Theory:

- Players: The decision-makers in the game

- Strategies: The available actions each player can take

- Payoffs: The outcomes or rewards associated with different strategy combinations

- Nash Equilibrium: A combination of strategies in which no player can improve their payoff by unilaterally changing their own strategy while everyone else keeps theirs fixed
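To make these four concepts concrete, here is a minimal Python sketch that enumerates the pure-strategy Nash equilibria of a small two-player game. The Prisoner's Dilemma payoffs and the function name `pure_nash_equilibria` are illustrative assumptions, not something taken from a particular library.

```python
from itertools import product


def pure_nash_equilibria(payoffs):
    """Return every pure-strategy Nash equilibrium of a two-player game.

    payoffs maps (row_strategy, col_strategy) -> (row_payoff, col_payoff).
    """
    rows = sorted({r for r, _ in payoffs})
    cols = sorted({c for _, c in payoffs})
    equilibria = []
    for r, c in product(rows, cols):
        row_pay, col_pay = payoffs[(r, c)]
        # Neither player may gain by deviating while the other stays put.
        row_ok = all(payoffs[(alt, c)][0] <= row_pay for alt in rows)
        col_ok = all(payoffs[(r, alt)][1] <= col_pay for alt in cols)
        if row_ok and col_ok:
            equilibria.append((r, c))
    return equilibria


# Illustrative payoffs (negative numbers are years in prison).
prisoners_dilemma = {
    ("cooperate", "cooperate"): (-1, -1),
    ("cooperate", "defect"): (-3, 0),
    ("defect", "cooperate"): (0, -3),
    ("defect", "defect"): (-2, -2),
}

print(pure_nash_equilibria(prisoners_dilemma))  # [('defect', 'defect')]
```

Mutual defection is the only equilibrium even though mutual cooperation pays both players more, which is why the Nash concept describes stability rather than optimality.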

LLMs as Strategic Agents

Large language models, while not traditionally conceived as game-theoretic agents, exhibit behaviors that can be analyzed through a strategic lens. When an LLM generates a response, the process can be described as a series of choices about what information to provide, how to frame it, and which linguistic strategies to employ.

LLM Strategic Behaviors:

Response Selection: When faced with ambiguous queries, LLMs must choose from multiple possible interpretations and responses. This choice reflects a strategic decision about what the user most likely intends.

Information Revelation: LLMs constantly make decisions about how much information to reveal, how detailed to be, and what level of complexity to employ in their explanations.

Conversation Management: In multi-turn conversations, LLMs demonstrate strategic behavior in maintaining context, building on previous exchanges, and guiding the conversation toward helpful outcomes.

Game Theory in LLM Training

The training process of LLMs involves game-theoretic elements, particularly in reinforcement learning from human feedback (RLHF).

The Training Game:

- Players: The model (learning agent) and human evaluators (feedback providers)

- Strategies: Different response generation approaches for the model; various evaluation criteria for humans

- Payoffs: Reward signals that guide model behavior toward human-preferred outputs

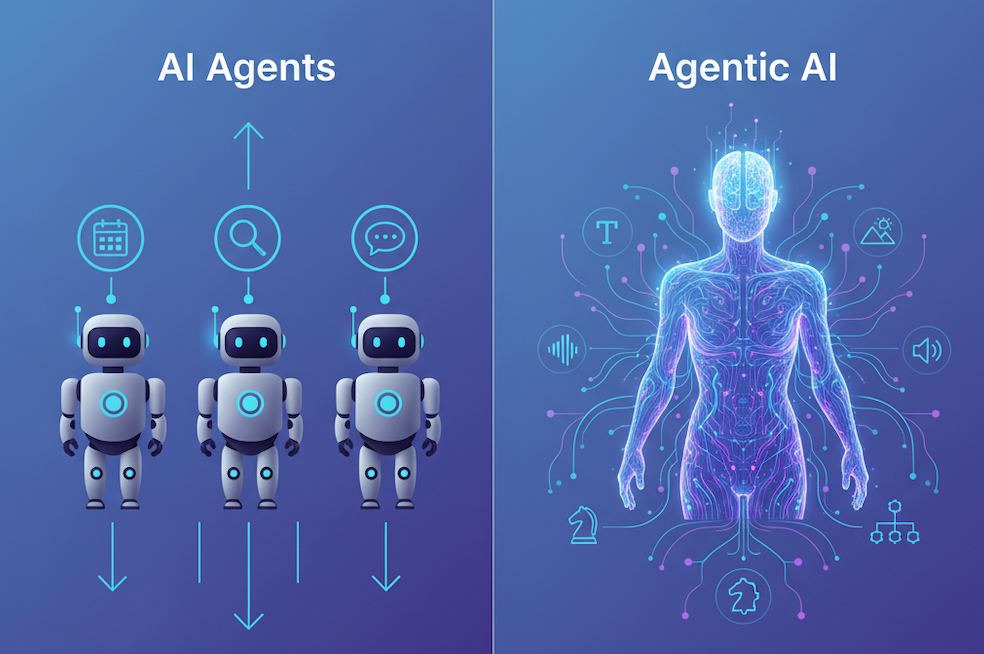
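As a rough illustration of how reward signals can pull a model toward human-preferred outputs, the sketch below treats the model as a softmax policy over three response styles and applies the exact policy gradient of the expected reward. The style names, the reward values, and the function `train_policy` are all hypothetical; real RLHF pipelines train a learned reward model and are far more involved than this toy.

```python
import math


def softmax(logits):
    """Convert logits into a probability distribution."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train_policy(rewards, steps=200, lr=0.5):
    """Gradient ascent on expected reward for a softmax policy.

    For a softmax policy, d E[reward] / d logit_i = p_i * (r_i - E[reward]).
    """
    logits = [0.0] * len(rewards)
    for _ in range(steps):
        probs = softmax(logits)
        expected = sum(p * r for p, r in zip(probs, rewards))
        logits = [
            logit + lr * p * (r - expected)
            for logit, p, r in zip(logits, probs, rewards)
        ]
    return softmax(logits)


# Hypothetical evaluator scores for three response styles (assumed values).
styles = ["evasive", "verbose", "clear and accurate"]
rewards = [0.1, 0.5, 0.9]

final_policy = train_policy(rewards)
for style, prob in zip(styles, final_policy):
    print(f"{style}: {prob:.3f}")
```

The policy starts uniform and ends up placing most of its probability on the style the evaluators score highest, which is the "payoff" mechanism of the training game in miniature.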

Multi-Agent LLM Systems

The emergence of multi-agent LLM systems creates fascinating game-theoretic scenarios where multiple AI agents interact, compete, or collaborate.

Competitive Scenarios:

Debate Systems: When multiple LLMs are tasked with arguing different sides of an issue, they must develop strategies to present compelling arguments while anticipating and countering opposing viewpoints.

Resource Competition: In scenarios where multiple LLMs compete for limited computational resources or user attention, game-theoretic principles help predict and optimize their behavior.

Information Markets: LLMs operating in information-rich environments must strategically decide what information to share, withhold, or emphasize based on the competitive landscape.

Quality Competition: Multiple LLMs competing for user preference must balance response quality, speed, and accuracy to maintain competitive advantage.

Collaborative Scenarios:

Consensus Building: Multiple LLMs working toward a common goal must balance individual optimization with collective benefit, embodying classic cooperative game theory principles.

Specialization Games: Different LLMs may develop complementary specializations, creating a division of labor that maximizes overall system performance.

Task Allocation: In collaborative environments, LLMs must strategically negotiate task distribution to optimize both individual performance and collective outcomes.
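One standard tool from cooperative game theory for dividing credit among collaborators is the Shapley value, which averages each player's marginal contribution over every order in which the team could be assembled. The sketch below applies it to three hypothetical agents; the agent names and coalition scores are made-up numbers chosen only for illustration.

```python
from itertools import permutations


def shapley_values(players, value):
    """Average each player's marginal contribution over all join orders."""
    totals = {p: 0.0 for p in players}
    orderings = list(permutations(players))
    for order in orderings:
        coalition = frozenset()
        for player in order:
            joined = coalition | {player}
            totals[player] += value(joined) - value(coalition)
            coalition = joined
    return {p: total / len(orderings) for p, total in totals.items()}


# Hypothetical task scores achieved by each possible team of agents.
scores = {
    frozenset(): 0,
    frozenset({"retriever"}): 10,
    frozenset({"reasoner"}): 20,
    frozenset({"writer"}): 10,
    frozenset({"retriever", "reasoner"}): 50,
    frozenset({"retriever", "writer"}): 20,
    frozenset({"reasoner", "writer"}): 40,
    frozenset({"retriever", "reasoner", "writer"}): 90,
}

phi = shapley_values(["retriever", "reasoner", "writer"], lambda team: scores[team])
for agent, share in phi.items():
    print(f"{agent}: {share:.2f}")
```

The shares always add up to the score of the full team, so the result can be read as one possible fair split of the collective outcome among specialized agents.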

Human-AI Strategic Interactions

The interaction between humans and LLMs can be modeled as a complex game where both parties have strategies, preferences, and incentives.

The Human-AI Game:

- Human Strategies: Query formulation, prompt engineering, feedback provision, and usage patterns

- AI Strategies: Response generation, information filtering, personalization, and engagement optimization

- Mutual Payoffs: User satisfaction, task completion, learning outcomes, and system improvement

Practical Applications and Case Studies

Constitutional AI and Alignment: Game theory provides insights into how LLMs can be trained to follow constitutional principles while remaining useful. The tension between helpfulness and harmlessness is a multi-objective optimization problem: pushing too hard on either objective degrades the other, and equilibrium-style analysis offers one way to reason about where the balance settles.

Multi-Objective Training: LLMs must balance competing objectives like accuracy, safety, and user satisfaction, creating an optimization landscape in which improving one objective can reduce another.

Adversarial Robustness: The adversarial training of LLMs resembles a two-player zero-sum game where one player (the adversary) tries to fool the model while the other player (the model) tries to maintain robust performance. This framing underlies adversarial training methods, in which the model is optimized against the worst-case inputs an adversary can find.
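The zero-sum framing can be illustrated with a tiny 2x2 game solved in closed form. In this sketch the rows are two hypothetical defenses available to the model, the columns are two hypothetical attacks, and each entry is an assumed robustness score that the model wants to maximize and the adversary wants to minimize. The numbers and the function name `solve_2x2_zero_sum` are illustrative only.

```python
def solve_2x2_zero_sum(matrix):
    """Solve a 2x2 zero-sum game for the row (maximizing) player.

    Returns (p, value), where p is the probability of playing the first row.
    If a pure-strategy saddle point exists, p is None and value is its payoff.
    """
    (a, b), (c, d) = matrix
    maximin = max(min(a, b), min(c, d))  # best guaranteed payoff for the row player
    minimax = min(max(a, c), max(b, d))  # lowest cap the column player can enforce
    if maximin == minimax:
        return None, maximin
    # No saddle point: mix so the column player is indifferent between columns.
    denom = a - b - c + d
    p = (d - c) / denom
    value = (a * d - b * c) / denom
    return p, value


# Assumed robustness scores: rows are defenses, columns are attacks.
robustness = [
    [0.9, 0.2],
    [0.4, 0.7],
]

p_first_defense, game_value = solve_2x2_zero_sum(robustness)
print(p_first_defense, game_value)
```

With these numbers neither defense is safe on its own, so the model does best by randomizing: it uses the first defense roughly 30% of the time and secures an expected robustness of about 0.55 regardless of which attack the adversary picks.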

Market Dynamics: In competitive LLM markets, providers must strategically balance model capabilities, pricing, and accessibility. Game theory helps predict market outcomes and optimal competitive strategies.

Prompt Engineering as Strategy: Users engaging with LLMs through prompt engineering are essentially playing a game where they must strategically craft inputs to elicit desired outputs. Understanding this as a strategic interaction can improve both user techniques and model design.

Challenges and Limitations

Rational Agent Assumption: Traditional game theory assumes rational agents that consistently maximize a well-defined utility function. LLMs may not behave this way, particularly when dealing with edge cases or conflicting objectives.

Information Asymmetry: The complexity of LLM decision-making processes creates significant information asymmetries that complicate game-theoretic analysis.

Dynamic Strategy Evolution: Unlike traditional game theory scenarios, LLM strategies can evolve through learning, making static equilibrium analysis less applicable.

Computational Constraints: Real-world LLM deployment involves computational limitations that affect strategic choices, adding complexity to traditional game-theoretic analysis.

Future Directions

Evolutionary Game Theory: As LLMs continue to evolve and adapt, evolutionary game theory may provide better frameworks for understanding their long-term strategic behavior and population-level dynamics.
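A common starting point in evolutionary game theory is the replicator dynamic, in which strategies that earn more than the population average grow in share. The sketch below runs a simple Euler-step version on a Hawk-Dove style payoff matrix; the matrix, the step size, and the reading of the two strategies as "aggressive" and "accommodating" agent behaviors are assumptions made for illustration.

```python
def replicator_step(shares, payoff, dt=0.1):
    """One Euler step of the replicator dynamic.

    shares[i] is the population share of strategy i, and payoff[i][j] is the
    payoff to strategy i when it meets strategy j.
    """
    n = len(shares)
    fitness = [sum(payoff[i][j] * shares[j] for j in range(n)) for i in range(n)]
    average = sum(s * f for s, f in zip(shares, fitness))
    # Strategies with above-average fitness gain share; the rest lose it.
    return [s + dt * s * (f - average) for s, f in zip(shares, fitness)]


# Hawk-Dove payoffs with resource value 2 and conflict cost 4 (assumed numbers).
payoff = [
    [-1, 2],  # aggressive vs. (aggressive, accommodating)
    [0, 1],   # accommodating vs. (aggressive, accommodating)
]

shares = [0.1, 0.9]  # start with 10% aggressive agents
for _ in range(500):
    shares = replicator_step(shares, payoff)

print([round(s, 3) for s in shares])
```

Starting from a population that is 10% aggressive, the shares drift toward an even split, the mixed equilibrium for these payoffs. The point is that the long-run mix is a property of the population dynamics rather than of any single agent's choice.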

Algorithmic Game Theory: The computational aspects of game theory become crucial when analyzing LLM behavior, as these systems operate under computational constraints that affect their strategic choices.

Mechanism Design: The principles of mechanism design can inform how to structure interactions between humans and AI systems to achieve desired outcomes while accounting for strategic behavior.
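A textbook example of mechanism design is the second-price (Vickrey) auction, where the highest bidder wins but pays the second-highest bid, which makes truthful bidding a dominant strategy. The sketch below uses it as a stand-in for any rule that allocates a scarce resource among strategic agents; the agent names, bids, and helper functions are hypothetical.

```python
def second_price_auction(bids):
    """Return (winner, price): the top bidder wins and pays the runner-up's bid."""
    ranked = sorted(bids.items(), key=lambda item: item[1], reverse=True)
    winner = ranked[0][0]
    price = ranked[1][1]
    return winner, price


def utility(agent, true_value, bids):
    """Payoff to `agent`: its true value minus the price if it wins, else zero."""
    winner, price = second_price_auction(bids)
    return true_value - price if winner == agent else 0


# Hypothetical setup: agent_a truly values the resource at 8.
other_bids = {"agent_b": 6, "agent_c": 4}
true_value = 8

truthful = utility("agent_a", true_value, {"agent_a": true_value, **other_bids})
by_bid = {
    bid: utility("agent_a", true_value, {"agent_a": bid, **other_bids})
    for bid in range(0, 13)
}

print("truthful utility:", truthful)
print("best utility over all bids:", max(by_bid.values()))
```

In this example no bid between 0 and 12 does better for agent_a than simply bidding its true value. That is the kind of property a mechanism designer looks for: the rules themselves remove the incentive to misreport.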

Cooperative AI: Game theory research is increasingly focusing on how to design AI systems that can effectively cooperate with humans and other AI systems, moving beyond competitive frameworks.

Conclusion

The intersection of game theory and large language models reveals a rich landscape of strategic interactions that fundamentally shape how these systems behave, learn, and interact with humans. From the training process to deployment scenarios, game-theoretic principles provide valuable insights into optimizing AI behavior and predicting system outcomes.

As LLMs become more sophisticated and ubiquitous, understanding their behavior through a game-theoretic lens becomes increasingly important. This perspective not only helps us design better AI systems but also enables more effective human-AI collaboration by recognizing the strategic nature of these interactions.

The future of AI development will likely see even deeper integration of game-theoretic principles, as we move toward more complex multi-agent systems and sophisticated human-AI partnerships. By embracing this strategic perspective, we can work toward AI systems that are not only more capable but also more aligned with human values and objectives.

Whether we’re training models, designing user interfaces, or creating multi-agent systems, game theory provides a powerful framework for understanding and optimizing the strategic landscape of modern artificial intelligence, and its relevance will grow as AI systems become more autonomous and interact more often with humans and with each other.
