
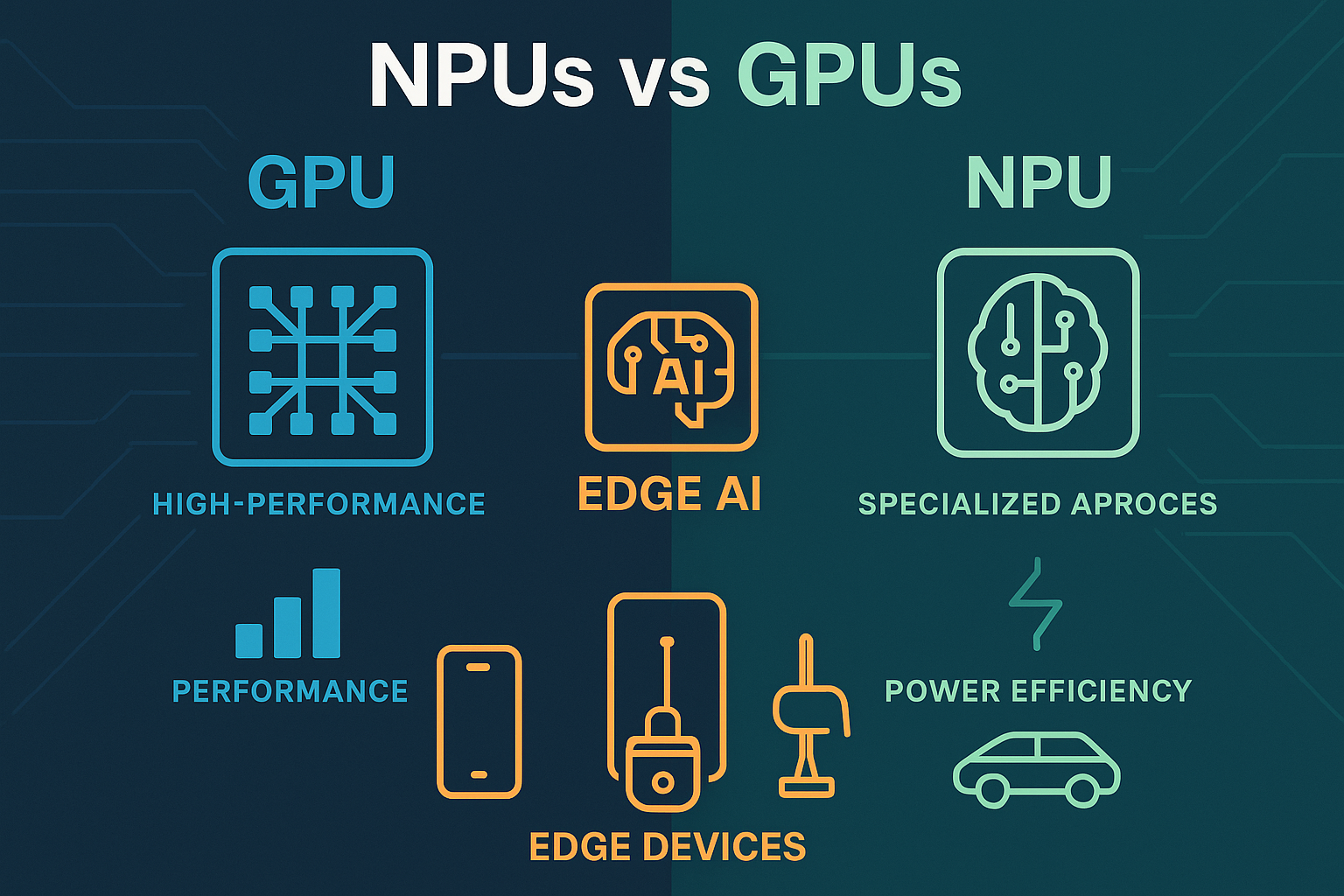

The artificial intelligence revolution is moving beyond cloud data centers and onto the devices we use every day. This shift toward edge AI is changing how we think about processing power, energy efficiency, and real-time intelligence, and it raises a practical question for anyone building these devices: should on-device AI run on a GPU or an NPU?

The Edge AI Imperative

Latency Reduction: Processing data locally eliminates the round-trip time to cloud servers, enabling real-time applications like autonomous navigation and augmented reality.

Privacy Protection: Sensitive data such as camera frames or voice recordings can be processed without ever leaving the device, addressing growing privacy concerns.

Bandwidth Optimization: Local processing reduces the amount of data that needs to be transmitted over networks.

Reliability Enhancement: Edge processing enables continued operation even when network connectivity is poor or unavailable.

Cost Efficiency: For high-volume applications, local processing can be more cost-effective than paying for cloud computing resources over the device’s lifetime.
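A back-of-the-envelope sketch of three of these benefits (latency, bandwidth, and cost). Every number here is a hypothetical placeholder, not a measurement: the 30 fps frame budget, the network and inference times, the 720p stream, the 24-byte detection record, the $5 per-device hardware premium, the $0.0001 cloud fee per inference, and the usage profile. The function names are illustrative only.

```python
from decimal import Decimal
import math

# All figures below are hypothetical placeholders, not measurements.

# --- Latency: does a cloud round trip fit a 30 fps frame budget? ---
FRAME_BUDGET_MS = 1000 / 30  # about 33.3 ms per frame

def cloud_latency_ms(upload_ms: float, network_rtt_ms: float, server_infer_ms: float) -> float:
    """Time for a frame to reach the server, be processed, and the result to return."""
    return upload_ms + network_rtt_ms + server_infer_ms

cloud_ms = cloud_latency_ms(upload_ms=15.0, network_rtt_ms=60.0, server_infer_ms=5.0)
edge_ms = 20.0  # on-device inference only; no network hop
print(f"cloud {cloud_ms:.0f} ms fits budget: {cloud_ms <= FRAME_BUDGET_MS}")
print(f"edge  {edge_ms:.0f} ms fits budget: {edge_ms <= FRAME_BUDGET_MS}")

# --- Bandwidth: raw 720p video upstream vs. sending detection results only ---
raw_bps = 1280 * 720 * 3 * 30 * 8  # width x height x RGB bytes x fps x bits per byte
meta_bps = 10 * 24 * 30 * 8        # 10 detections x 24 bytes each x fps x bits per byte
print(f"raw video {raw_bps / 1e6:.1f} Mbit/s vs results {meta_bps / 1e3:.1f} kbit/s")

# --- Cost: when does a per-device hardware premium beat per-inference cloud fees? ---
def break_even_inferences(hw_premium: Decimal, cloud_fee: Decimal) -> int:
    """Inferences after which local hardware has paid for itself.
    Ignores energy, maintenance, and engineering costs (a simplifying assumption)."""
    if cloud_fee <= 0:
        raise ValueError("cloud fee per inference must be positive")
    return math.ceil(hw_premium / cloud_fee)

needed = break_even_inferences(Decimal("5.00"), Decimal("0.0001"))
lifetime = 1_000 * 365 * 3  # 1,000 inferences per day for 3 years
print(f"break-even at {needed:,} inferences; lifetime use {lifetime:,}")
```

`Decimal` keeps the currency arithmetic exact. The raw-video figure assumes uncompressed frames; real deployments usually encode video before upload, so the true gap is smaller, though typically still large.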

Understanding GPUs in the AI Context

Graphics Processing Units (GPUs) were designed to render graphics, not to run AI, but their massively parallel architecture turned out to suit the matrix and vector arithmetic at the core of machine learning remarkably well.

GPU Strengths for AI:

- Parallel Processing Power: GPUs excel at performing thousands of simple calculations simultaneously

- Mature Ecosystem: Well-established tooling such as NVIDIA's CUDA platform, with first-class support in frameworks like PyTorch and TensorFlow

- Flexibility: GPUs can handle a wide variety of AI models and architectures

- Scalable Performance: GPU options range from embedded modules to high-end parts that deliver substantial computational power

- Proven Track Record: The AI industry has extensive experience optimizing models and applications for GPU architectures
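To make "thousands of simple calculations simultaneously" concrete: in a matrix multiplication, the core operation of most neural network layers, every output element is an independent dot product. This is a minimal pure-Python sketch for illustration only; it runs sequentially, and the comment about GPU threads describes a simple mapping a kernel might use, not how any particular library implements it. The 1024 x 1024 layer size is a hypothetical example.

```python
def matmul_cell(a: list[list[float]], b: list[list[float]], i: int, j: int) -> float:
    """One output element: the dot product of row i of a with column j of b.
    It reads only the inputs, so no output cell depends on any other."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))

def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    # Every (i, j) pair is an independent task. A simple GPU kernel might
    # assign each pair to its own thread; here we just loop over them.
    rows, cols = len(a), len(b[0])
    return [[matmul_cell(a, b, i, j) for j in range(cols)] for i in range(rows)]

a = [[1.0, 2.0], [3.0, 4.0]]
b = [[5.0, 6.0], [7.0, 8.0]]
print(matmul(a, b))

# Scale of the available parallelism for a hypothetical 1024 x 1024 layer:
independent_cells = 1024 * 1024  # independent dot products
macs_per_cell = 1024             # multiply-accumulates inside each one
print(f"{independent_cells:,} independent tasks, {independent_cells * macs_per_cell:,} MACs total")
```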

The Rise of NPUs: Purpose-Built for AI

Neural Processing Units (NPUs) represent a fundamental shift in processor design philosophy. Rather than adapting existing architectures for AI workloads, NPUs are designed from the ground up specifically for neural network operations.

NPU Design Principles:

- AI-Optimized Architecture: Hardware organized around the dense matrix multiplications and convolutions that dominate neural network inference

- Energy Efficiency: Designed to maximize performance per watt

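- Specialized Data Paths: Dedicated hardware, such as large multiply-accumulate (MAC) arrays, for the operations neural networks use most

- Fixed-Point Arithmetic: Low-precision integer formats (commonly INT8) in place of 32-bit floating point, trading a small amount of accuracy for higher throughput and lower energy per operation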

- On-Chip Memory Optimization: NPU designs often include specialized memory architectures that minimize data movement
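A minimal sketch of what fixed-point inference looks like, assuming symmetric per-tensor INT8 quantization (one common scheme among several). Floats are mapped to 8-bit integers with a single scale factor, the dot product runs entirely on integers (as an NPU's MAC array would), and the integer result is rescaled at the end. The weights and activations are made-up values, and the function names are hypothetical; real NPU toolchains may also use zero points, per-channel scales, and calibration data.

```python
def quantize_symmetric_int8(values: list[float]) -> tuple[list[int], float]:
    """Map floats to int8 with one symmetric scale: q = round(x / scale), clamped to [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale

def int8_dot(qa: list[int], qb: list[int]) -> int:
    """Integer multiply-accumulate; hardware typically accumulates int8 products in int32."""
    return sum(x * y for x, y in zip(qa, qb))

weights = [0.42, -1.30, 0.07, 0.95]      # hypothetical layer weights
activations = [1.10, 0.25, -0.60, 0.80]  # hypothetical input activations

qw, sw = quantize_symmetric_int8(weights)
qx, sx = quantize_symmetric_int8(activations)

exact = sum(w * x for w, x in zip(weights, activations))  # floating-point reference
approx = int8_dot(qw, qx) * sw * sx                       # rescale integer result to real units
print(f"float result: {exact:.4f}")
print(f"int8 result:  {approx:.4f}")
```

The two results differ only slightly, which is the accuracy trade-off the bullet above refers to; in exchange, each multiply works on 8-bit rather than 32-bit operands.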

Use Case Analysis: Choosing the Right Architecture

Smartphone AI Applications: NPUs have become the dominant choice for smartphone AI features like camera enhancement, voice recognition, and predictive text due to their low power consumption.

Autonomous Vehicles: The automotive industry is adopting a hybrid approach, using high-performance GPUs for complex perception while assigning specific, well-defined workloads to NPUs.

Industrial IoT: Edge AI in industrial settings often favors NPUs for their reliability, predictable performance, and energy efficiency.

Smart Home Devices: Consumer IoT devices typically use NPUs for their low power consumption and cost-effectiveness.
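Low power consumption matters on battery-powered devices because the energy each inference costs is average power multiplied by time. A slower processor can therefore still win if its power draw is low enough. The sketch below compares two hypothetical operating points for the same model; the wattages, latencies, battery size, and function names are illustrative assumptions, not figures for any real chip.

```python
def energy_per_inference_mj(avg_power_w: float, latency_ms: float) -> float:
    """Energy in millijoules: watts x milliseconds = millijoules."""
    return avg_power_w * latency_ms

def inferences_per_charge(battery_wh: float, energy_mj: float) -> int:
    """Upper bound on inferences per charge if inference were the only load."""
    battery_mj = battery_wh * 3600 * 1000  # 1 Wh = 3,600 J = 3,600,000 mJ
    return int(battery_mj // energy_mj)

# Hypothetical operating points: the NPU is slower here but draws far less power.
gpu_mj = energy_per_inference_mj(avg_power_w=5.0, latency_ms=10.0)
npu_mj = energy_per_inference_mj(avg_power_w=1.0, latency_ms=15.0)

BATTERY_WH = 15.0  # hypothetical phone-sized battery
for name, mj in [("GPU", gpu_mj), ("NPU", npu_mj)]:
    print(f"{name}: {mj:.0f} mJ/inference, ~{inferences_per_charge(BATTERY_WH, mj):,} inferences per charge")
```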

Making the Decision: Key Factors

Performance Requirements: Assess whether your application needs maximum computational power (favor GPU) or optimized efficiency for specific models (favor NPU).

Power Constraints: Battery-powered or thermally constrained devices typically benefit more from NPU architectures.

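Model Flexibility: If you need to support diverse or evolving AI models, GPUs offer more flexibility. NPUs often accelerate only a specific set of operators, and layers outside that set may fall back to the CPU.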

Development Timeline: Consider your team’s existing expertise and the maturity of development tools for each platform.

Integration Requirements: Consider how well each option integrates with your existing system architecture and other components.
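The factors above can be turned into a rough checklist. This sketch is hypothetical: the `Requirements` fields, the equal one-point weighting, and the tie rule are illustrative assumptions, and Integration Requirements is left out because it depends on the specific system. Treat the output as a starting point for benchmarking, not a verdict.

```python
from dataclasses import dataclass
from typing import Literal

@dataclass
class Requirements:
    needs_peak_compute: bool                       # Performance Requirements
    battery_or_thermal_limited: bool               # Power Constraints
    models_change_often: bool                      # Model Flexibility
    team_expertise: Literal["gpu", "npu", "none"]  # Development Timeline

def suggest_processor(req: Requirements) -> str:
    """Tally one point per factor (equal weights are an assumption)."""
    gpu = npu = 0
    if req.needs_peak_compute:
        gpu += 1
    if req.battery_or_thermal_limited:
        npu += 1
    if req.models_change_often:
        gpu += 1  # GPUs tolerate new or unusual architectures
    else:
        npu += 1  # a fixed, well-defined model suits an NPU
    if req.team_expertise == "gpu":
        gpu += 1
    elif req.team_expertise == "npu":
        npu += 1
    if gpu > npu:
        return "GPU"
    if npu > gpu:
        return "NPU"
    return "benchmark both"

# Example: a battery-powered smart-home sensor running one fixed model.
print(suggest_processor(Requirements(False, True, False, "none")))
```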

The Hybrid Future

Rather than a winner-take-all scenario, edge AI is moving toward orchestration across different processing architectures. Many edge system-on-chips already pair a CPU and GPU with an NPU, and increasingly capable devices can route each part of an AI workload to the processor best suited to it.
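One simple routing policy, sketched under assumptions: each task declares the operator types its model uses, and a task goes to the power-efficient NPU only if the NPU supports all of them; otherwise it runs on the more flexible GPU. `NPU_SUPPORTED_OPS`, the task names, and their operator lists are hypothetical, since real operator coverage varies by chip and toolchain.

```python
from dataclasses import dataclass

# Hypothetical operator coverage for one NPU; real coverage depends on the chip and its compiler.
NPU_SUPPORTED_OPS = frozenset({"conv2d", "depthwise_conv2d", "matmul", "relu", "add", "avg_pool"})

@dataclass(frozen=True)
class Task:
    name: str
    ops: frozenset[str]  # operator types the task's model uses

def route(task: Task) -> str:
    """Prefer the NPU; fall back to the GPU when the model uses any operator the NPU cannot run."""
    unsupported = task.ops - NPU_SUPPORTED_OPS
    return "gpu" if unsupported else "npu"

wake_word = Task("wake_word", frozenset({"conv2d", "relu", "matmul"}))
segmentation = Task("scene_segmentation", frozenset({"conv2d", "relu", "resize_bilinear", "argmax"}))

for t in (wake_word, segmentation):
    print(f"{t.name} -> {route(t)}")
```

A production scheduler would also weigh current load, thermal headroom, and latency deadlines. Some runtimes instead split a single model, running the supported layers on the NPU and the rest on another processor.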

Conclusion

The choice between NPUs and GPUs for edge AI isn’t about picking the “better” technology; it’s about selecting the right tool for your specific requirements and constraints. Organizations that understand these trade-offs will be best placed to get real value from AI at the edge.
