
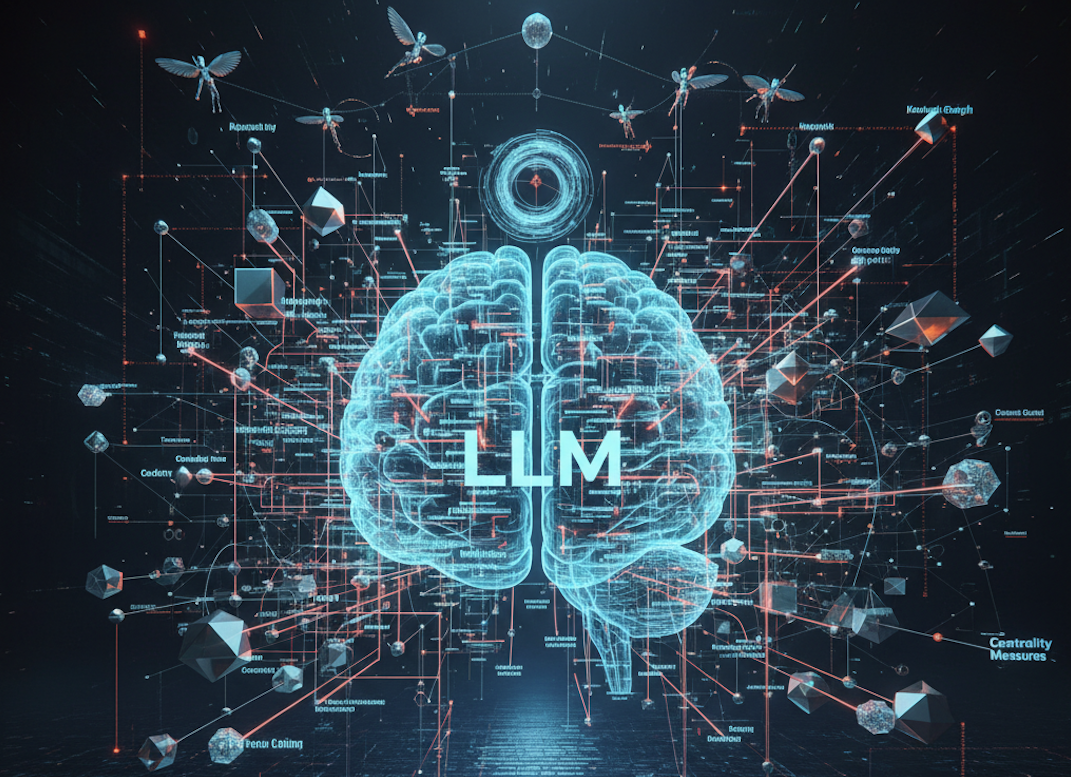

When we interact with large language models (LLMs) like GPT, Claude, or LLaMA, we’re witnessing the culmination of decades of research in artificial intelligence, natural language processing, and computational linguistics. Beneath the surface of these seemingly magical text generators, however, lies a sophisticated mathematical framework, much of which can be described in the language of graph theory.

The Foundation: What is Graph Theory?

Graph theory is a branch of mathematics that studies structures made up of vertices (nodes) and edges (connections). In its simplest form, a graph consists of points connected by lines, but this simple concept has profound applications across computer science.

Key Graph Concepts in AI:

- Nodes (Vertices): Represent entities like words, concepts, or data points

- Edges: Represent relationships, similarities, or transitions between entities

- Directed vs Undirected: Whether relationships flow in one direction or both

- Weighted Graphs: Where connections have associated strengths or probabilities

- Subgraphs: Smaller graph structures within larger ones

- Hierarchical Graphs: Multi-level structures representing different abstraction levels
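Several of these concepts (nodes, directed and weighted edges, subgraphs) can be made concrete with a plain adjacency map. The sketch below is a minimal illustration under that assumption: the words and edge weights are invented, and `add_edge` and `subgraph` are hypothetical helpers, not functions from any particular library.

```python
from collections import defaultdict

# A weighted, directed graph as an adjacency map:
# graph[u][v] is the weight of the edge u -> v (e.g. a transition probability).
graph = defaultdict(dict)

def add_edge(u, v, weight, directed=True):
    """Add an edge; an undirected edge is stored in both directions."""
    graph[u][v] = weight
    if not directed:
        graph[v][u] = weight

# Invented word-transition probabilities, for illustration only.
add_edge("the", "cat", 0.6)
add_edge("the", "dog", 0.4)
add_edge("cat", "sat", 0.9)
add_edge("dog", "sat", 0.7)

def subgraph(nodes):
    """Induced subgraph: keep the given nodes and only the edges between them."""
    return {u: {v: w for v, w in graph.get(u, {}).items() if v in nodes}
            for u in sorted(nodes)}

print(graph["the"])                     # {'cat': 0.6, 'dog': 0.4}
print(subgraph({"the", "cat", "sat"}))  # {'cat': {'sat': 0.9}, 'sat': {}, 'the': {'cat': 0.6}}
```

A dictionary of dictionaries keeps neighbor lookup cheap and suits sparse graphs; a dense matrix (as used for attention later in this article) is the natural alternative when almost every pair of nodes is connected.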

Language as a Graph Structure

Natural language inherently possesses graph-like properties that make graph theory a natural fit for language modeling:

Syntactic Relationships: The words in a sentence can be arranged into dependency trees, in which each word points to its grammatical head, and into constituency trees; both are graphs by definition.
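As a small illustration, a dependency tree can be stored as one head index per word. The parse below is hand-annotated in a Universal Dependencies-like style, not produced by a parser, and the `depth` helper is a hypothetical name chosen for this sketch.

```python
# Hand-annotated dependency parse, written for illustration (not parser output):
# heads[i] is the index of token i's syntactic head; -1 marks the root.
tokens = ["The", "cat", "sat", "on", "the", "mat"]
heads = [1, 2, -1, 5, 5, 2]

# Store the tree as a directed graph: head index -> list of dependent indices.
children = {i: [] for i in range(len(tokens))}
for dep, head in enumerate(heads):
    if head >= 0:
        children[head].append(dep)

def depth(i):
    """Number of edges on the path from token i up to the root."""
    d = 0
    while heads[i] != -1:
        i = heads[i]
        d += 1
    return d

root = heads.index(-1)
print(tokens[root])                            # sat
print([tokens[c] for c in children[root]])     # ['cat', 'mat']
print([depth(i) for i in range(len(tokens))])  # [2, 1, 0, 2, 2, 1]
# A tree over n words always has exactly n - 1 edges.
print(sum(len(c) for c in children.values()))  # 5
```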

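Semantic Networks: Concepts and their meanings form complex networks of associations. Lexical databases such as WordNet, for example, link words through relations like synonymy and hypernymy ("dog" is a kind of "animal").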

Discourse Structure: Even at the document level, ideas connect through references, logical flow, and thematic coherence, all of which are graph-like properties.

Graph Theory in Transformer Architecture

The revolutionary Transformer architecture that powers most modern LLMs lends itself naturally to a graph-theoretic reading:

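Attention as Graph Connectivity: The attention mechanism in Transformers can be viewed as building a weighted, directed graph over the tokens in a sequence, where the weight on the edge from token i to token j measures how strongly i attends to j. In bidirectional (encoder-style) attention this graph is complete: every token is connected to every other token. In decoder-only models such as GPT and LLaMA, a causal mask removes the edges to future tokens, so each token connects only to itself and to the tokens before it.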
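The sketch below treats scaled dot-product attention weights as a weighted adjacency matrix and counts the surviving edges with and without a causal mask (the mask used by decoder-only models, which hides future tokens). It assumes random toy query and key vectors in place of learned projections; `attention_graph` and `edge_count` are hypothetical helper names.

```python
import math
import random

def softmax(xs):
    """Numerically stable softmax; exp(-inf) contributes a weight of 0."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention_graph(q, k, causal=False):
    """Return A with A[i][j] = attention weight on the edge i -> j.

    Scores are scaled dot products q_i . k_j / sqrt(d). With causal=True,
    future positions (j > i) are masked to -inf before the softmax.
    """
    n, d = len(q), len(q[0])
    graph = []
    for i in range(n):
        scores = [
            -math.inf if causal and j > i
            else sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d)
            for j in range(n)
        ]
        graph.append(softmax(scores))
    return graph

random.seed(0)
n, d = 4, 8
# Toy query/key vectors standing in for learned projections of token embeddings.
q = [[random.gauss(0, 1) for _ in range(d)] for _ in range(n)]
k = [[random.gauss(0, 1) for _ in range(d)] for _ in range(n)]

full = attention_graph(q, k)
causal = attention_graph(q, k, causal=True)

def edge_count(a):
    """Number of edges with nonzero weight."""
    return sum(1 for row in a for w in row if w > 0)

print(edge_count(full))    # 16: complete graph over 4 tokens, self-loops included
print(edge_count(causal))  # 10: only edges with j <= i survive the mask
```

Each row sums to 1, so the weights out of every token form a probability distribution over its neighbors.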

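Multi-Head Attention as Multiple Graph Views: Each attention head has its own query and key projections, so it computes its own set of edge weights over the same tokens. Multiple heads therefore give the model different “views” of the same input graph, each potentially capturing different types of relationships.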
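To show how separate heads induce separate graphs over one input, here is a sketch with two heads whose projection matrices are random rather than learned (an assumption for illustration). It omits value projections and the final output projection that a real multi-head layer also applies.

```python
import math
import random

random.seed(1)
n, d_model, n_heads = 4, 8, 2
d_head = d_model // n_heads

def rand_matrix(rows, cols):
    return [[random.gauss(0, 1) for _ in range(cols)] for _ in range(rows)]

def matmul(a, b):
    """Plain-Python matrix product of a (r x m) and b (m x c)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(a):
    return [list(col) for col in zip(*a)]

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

x = rand_matrix(n, d_model)  # toy token embeddings, one row per token

graphs = []
for _ in range(n_heads):
    # Each head has its own query/key projections (random here, learned in practice),
    # so each head induces its own weighted graph over the same tokens.
    w_q, w_k = rand_matrix(d_model, d_head), rand_matrix(d_model, d_head)
    scores = matmul(matmul(x, w_q), transpose(matmul(x, w_k)))  # Q K^T
    graphs.append([softmax([s / math.sqrt(d_head) for s in row]) for row in scores])

for h, a in enumerate(graphs):
    # Index of the strongest outgoing edge from each token in this head's graph.
    print(h, [max(range(n), key=lambda j: a[i][j]) for i in range(n)])
```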

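Layer Stacking as Graph Refinement: Because attention weights are recomputed from each layer’s input, every transformer layer effectively builds a new graph over the tokens, refining the representation produced by the layer below. Early layers tend to capture more basic relationships, while deeper layers build increasingly abstract connections.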
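One way to make the stacking intuition concrete is to compose the per-layer attention graphs, in the spirit of a technique known as attention rollout: each layer's attention matrix is averaged with the identity (a rough stand-in for the residual connection) and multiplied onto the layers below it. This is a sketch under stated assumptions: the per-layer causal attention matrices are random toys, not taken from a trained model, and `random_causal_attention` is a hypothetical helper.

```python
import math
import random

random.seed(2)
n, n_layers = 5, 3

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def random_causal_attention(n):
    """Toy per-layer attention graph: row i is a softmax over positions 0..i."""
    rows = []
    for i in range(n):
        weights = softmax([random.gauss(0, 1) for _ in range(i + 1)])
        rows.append(weights + [0.0] * (n - i - 1))
    return rows

identity = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
rollout = identity
for _ in range(n_layers):
    a = random_causal_attention(n)
    # Mix in the identity to approximate the residual connection, then compose
    # this layer's graph with the composed graph of all layers below it.
    a_res = [[0.5 * a[i][j] + 0.5 * identity[i][j] for j in range(n)] for i in range(n)]
    rollout = matmul(a_res, rollout)

# rollout[i][j]: how much token i's final representation draws on input token j,
# summed over all paths through the stacked layers.
for row in rollout:
    print([round(w, 3) for w in row])
```

Because every per-layer matrix is row-stochastic and lower-triangular, so is their product: the composed graph still respects the causal ordering.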

Graph Neural Networks Meet Language Models

The convergence of Graph Neural Networks (GNNs) and language models represents a powerful synthesis:

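Graph Convolutional Networks (GCNs): A GCN layer updates each node by aggregating the features of its neighbors and itself (normalized by node degree), applying a learned linear transformation, and passing the result through a nonlinearity. This is similar to how language models aggregate contextual information from surrounding words.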
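A minimal sketch of one GCN layer, using the widely used symmetric normalization ReLU(D^-1/2 (A + I) D^-1/2 H W), where A is the adjacency matrix, I adds self-loops, and D is the degree matrix of A + I. The three-node path graph, the one-hot feature, and the identity weight matrix are assumptions chosen so that only the aggregation step is visible.

```python
import math

def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def gcn_layer(adj, h, w):
    """One graph convolution: ReLU(D^-1/2 (A + I) D^-1/2 H W).

    adj: n x n symmetric 0/1 adjacency matrix, h: n x f node features,
    w: f x f_out weight matrix (learned in practice).
    """
    n = len(adj)
    # Add self-loops so each node keeps part of its own features.
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    # Symmetric degree normalisation keeps high-degree nodes from dominating.
    norm = [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    out = matmul(matmul(norm, h), w)
    return [[max(0.0, v) for v in row] for row in out]

# Path graph 0 - 1 - 2 with a single feature that is "on" only at node 0.
adj = [[0, 1, 0],
       [1, 0, 1],
       [0, 1, 0]]
h0 = [[1.0], [0.0], [0.0]]
w = [[1.0]]  # identity weights, so only the aggregation is visible

h1 = gcn_layer(adj, h0, w)
h2 = gcn_layer(adj, h1, w)
print(h1)  # node 2 is still 0.0: one layer only reaches direct neighbours
print(h2)  # after two layers, node 2 has received information from node 0
```

Each additional layer extends a node's reach by one hop, which is one reason GNNs are stacked.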

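Message Passing: In message passing, the core operation of GNNs, each node gathers information from its neighbors and uses it to update its own representation. Self-attention can be read the same way: it is message passing over a fully connected graph (or a causally masked one, in decoder-only models) whose edge weights are computed from the input itself.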
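The parallel can be sketched with one generic message-passing function that is instantiated twice: once as GNN-style mean aggregation over a sparse graph, and once as attention-style aggregation over a complete graph with softmax weights. The toy features are invented, and for simplicity the attention variant uses the raw features as queries, keys, and values (real attention applies learned projections first).

```python
import math

def message_passing(neighbors, h, weight):
    """One round of message passing.

    Node i receives the message weight(i, j) * h[j] from each neighbour j
    and aggregates by summation. Different choices of `neighbors` and
    `weight` recover different models.
    """
    out = []
    for i in range(len(h)):
        agg = [0.0] * len(h[0])
        for j in neighbors[i]:
            w = weight(i, j)
            agg = [a + w * x for a, x in zip(agg, h[j])]
        out.append(agg)
    return out

h = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy node / token features

# 1) GNN-style: sparse graph, mean over neighbours.
sparse = {0: [1], 1: [0, 2], 2: [1]}
mean_out = message_passing(sparse, h, lambda i, j: 1 / len(sparse[i]))

# 2) Attention-style: complete graph, weights = softmax of dot-product scores.
complete = {i: list(range(len(h))) for i in range(len(h))}

def attn_weights(i):
    scores = [sum(a * b for a, b in zip(h[i], h[j])) for j in complete[i]]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

attn = [attn_weights(i) for i in range(len(h))]
attn_out = message_passing(complete, h, lambda i, j: attn[i][j])

print(mean_out)  # [[0.0, 1.0], [1.0, 0.5], [0.0, 1.0]]
print([[round(v, 3) for v in row] for row in attn_out])
```

The only differences between the two models are which edges exist and how edge weights are chosen: fixed by graph structure in the first case, computed from the content in the second.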

Node Embeddings: Both GNNs and language models learn to represent entities (nodes/tokens) in high-dimensional spaces where similar entities cluster together.
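To connect embeddings back to graphs, the sketch below measures cosine similarity between vectors and links each word to its nearest neighbor, producing a k-nearest-neighbor similarity graph. The 3-dimensional vectors are hand-picked for illustration (real models learn vectors with hundreds or thousands of dimensions), and `knn_graph` is a hypothetical helper.

```python
import math

# Hand-picked 3-d "embeddings", invented for illustration only.
emb = {
    "cat":   [0.9, 0.8, 0.1],
    "dog":   [0.8, 0.9, 0.2],
    "car":   [0.1, 0.2, 0.9],
    "truck": [0.2, 0.1, 0.8],
}

def cosine(u, v):
    """Cosine similarity: 1.0 for vectors pointing the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def knn_graph(emb, k=1):
    """Connect each word to its k most similar other words."""
    graph = {}
    for word, vec in emb.items():
        others = sorted((x for x in emb if x != word),
                        key=lambda x: cosine(vec, emb[x]), reverse=True)
        graph[word] = others[:k]
    return graph

print(knn_graph(emb))  # {'cat': ['dog'], 'dog': ['cat'], 'car': ['truck'], 'truck': ['car']}
```

Words that cluster together in the embedding space end up as neighbors in the resulting graph.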

Conclusion

Understanding the graph-theoretic foundations of LLMs has practical implications for information retrieval, question answering, and text generation. The success of graph-based ideas in language modeling may also reflect something deeper about the structure of language and thought.

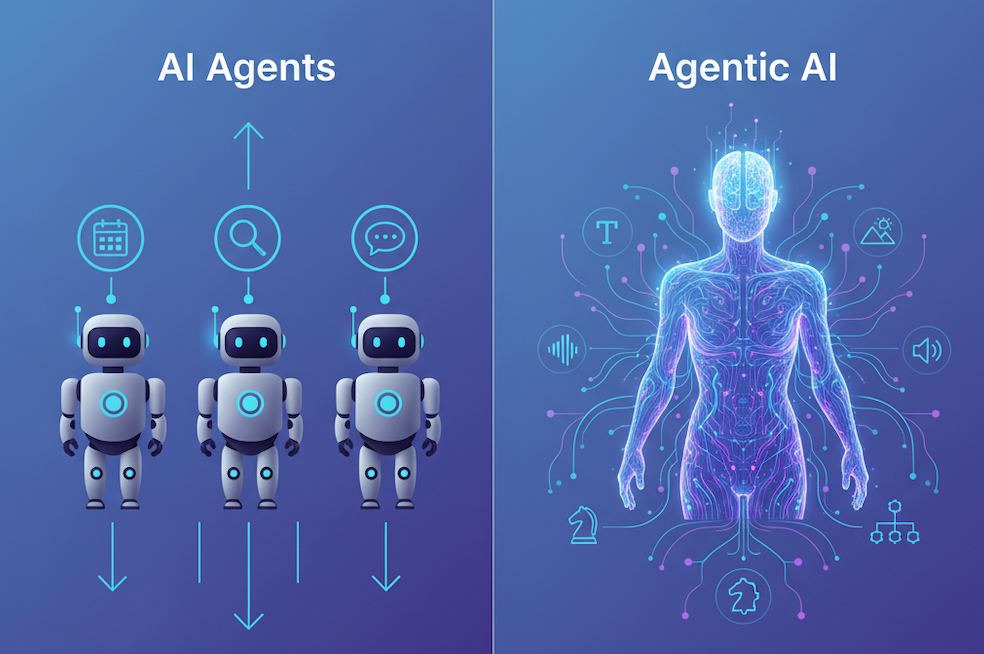

These graph-theoretic principles also intersect with strategic decision-making in multi-agent scenarios, where the relationships between different AI systems can be modeled as complex strategic networks.
